Abstract: High-performance networking and load balancing are essential for the efficient operation of large-scale data centers, which support the computing needs of enterprises and organizations worldwide. This article explores the fundamental components of data center networking, including switches, routers, and IP-based connectivity, and examines the role of load balancing in optimizing traffic distribution, enhancing reliability, and ensuring system resilience. Key strategies such as hardware-based, software-based, virtual, and application-level load balancing are discussed in detail alongside emerging technologies like SDN, AI-driven automation, and edge computing. The article also addresses the challenges of scalability, network latency, and security, emphasizing the importance of advanced monitoring tools and dynamic load balancing algorithms. Through real-world case studies, it demonstrates how innovative techniques and performance optimization strategies are transforming data center operations, paving the way for future trends in networking and digital infrastructure.

Keywords: High-Performance Networking, Load Balancing, Data Centers, Software-Defined Networking (SDN), Network Virtualization, AI-Driven Automation, Edge Computing, Network Scalability, Traffic Distribution, System Resilience, Network Latency, Data Center Monitoring, Fault Tolerance, Dynamic Load Balancing Algorithms, Digital Infrastructure

High-performance networking and load balancing are critical components of modern large-scale data centers, which are essential for supporting the vast computing needs of enterprises and organizations worldwide. Data centers, which serve as centralized hubs for computing resources and applications, rely heavily on these technologies to ensure optimal performance, reliability, and efficiency. With over 7 million data centers operating globally, each tasked with managing substantial volumes of data and providing seamless application services, the role of networking and load balancing has never been more significant.[1]

Networking fundamentals in data centers start with a robust infrastructure of servers, storage systems, switches, and routers, all interconnected to carry data between them. Understanding these fundamentals is crucial for designing networks that effectively support organizational goals. Key elements such as IP addressing and network architecture determine how connectivity is established within these facilities. The Transmission Control Protocol/Internet Protocol (TCP/IP) suite, together with an aggregation layer that consolidates many access-layer uplinks, provides high-speed data transfer and scalability. These lay the groundwork for sophisticated networking and load balancing strategies.[2][3]

Load balancing is a pivotal technique for managing network traffic and maintaining high availability in data centers. By distributing traffic evenly across multiple servers, load balancing prevents server overload, enhances application performance, and contributes to system resilience. This method can be implemented at various levels—network, transport, or application—using both hardware and software-based solutions. Advanced load balancing techniques, including virtual and application-level load balancing, allow data centers to customize their infrastructure to meet specific needs and optimize resource utilization effectively.[4]

The continuous evolution of data centers necessitates ongoing innovation in performance optimization strategies and monitoring tools. Emerging trends like Software-Defined Networking (SDN), artificial intelligence, and edge computing are transforming network management, enabling more dynamic, scalable, and secure data environments. These innovations, alongside case studies demonstrating the practical application of advanced techniques, underscore the importance of adaptive strategies in maintaining high-performance networking. As data center demands grow, these technologies will play a crucial role in ensuring that networks remain efficient, reliable, and capable of meeting the challenges of modern digital applications.[5][6]

Data Centers

Data centers are essential components of modern enterprises, serving as centralized locations for computing resources and applications[1]. They are designed to support business applications and provide services such as data storage, management, and distribution. Today, there are reportedly more than 7 million data centers worldwide, with practically every business and government entity maintaining its own or having access to another's data center[1].

A data center consists of various components, including servers, storage systems, switches, routers, and security devices[2][3]. These components are connected through networks that facilitate the collection and distribution of data, a process known as data center networking[4]. The infrastructure of a data center is housed in secure facilities, typically organized by halls, rows, and racks. This infrastructure is supported by significant power subsystems, uninterruptible power supplies (UPS), ventilation and cooling systems, backup generators, and cabling plants[2].

To ensure high availability and application performance, data centers employ mechanisms such as automatic failover and load balancing, which provide application resiliency[2]. Load balancing helps distribute network or application traffic across multiple servers, ensuring no single server becomes overwhelmed and that performance remains optimal[4].

The most widely adopted standard for data center design and infrastructure is ANSI/TIA-942, which provides guidelines for building and maintaining these complex systems[2]. The efficient processing, storage, and distribution of large amounts of data are critical functions that data centers perform, making them indispensable to the operation of modern enterprises[3].

Networking Fundamentals

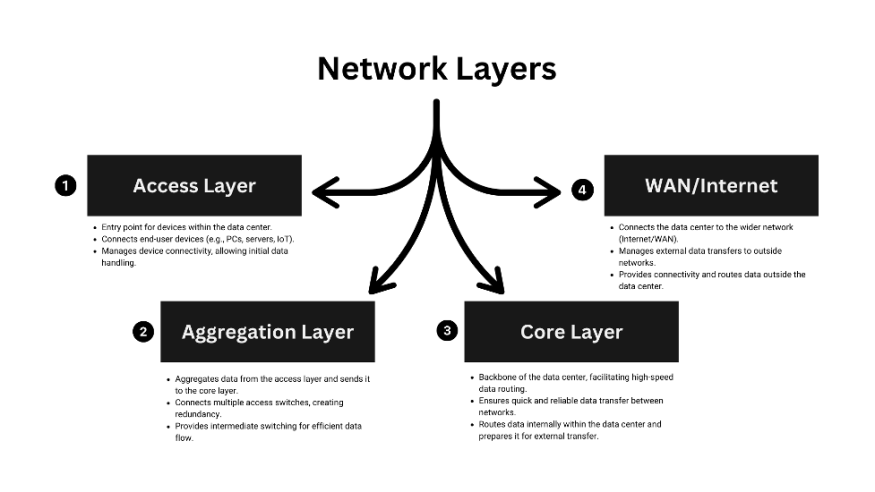

Understanding networking fundamentals is crucial for grasping the complexities of large-scale data centers. At the core, networking involves several key components and principles that establish the framework for connectivity and communication within a data center. Essential networking components include IP addresses, which uniquely identify devices within an Internet Protocol (IP) network, defining their host network and location[5]. A computer network architecture provides the theoretical framework of a network, dictating the layout, communication protocols, and connectivity patterns necessary for efficient operation[6].
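As a small illustration of how an IP address identifies both a host and the network it belongs to, the sketch below uses Python's standard ipaddress module. The address 10.1.20.37/24 and the comparison addresses are hypothetical values chosen only for the example.

```python
import ipaddress

# A hypothetical server interface: address plus prefix length (CIDR notation).
iface = ipaddress.ip_interface("10.1.20.37/24")

network = iface.network   # the network the host belongs to: 10.1.20.0/24
host_addr = iface.ip      # the host's own address: 10.1.20.37

# The prefix length says how many leading bits identify the network;
# the remaining bits identify the host within that network.
host_bits = network.max_prefixlen - network.prefixlen
host_part = int(host_addr) & ((1 << host_bits) - 1)

print(f"network:   {network}")
print(f"host:      {host_addr}")
print(f"host part: {host_part}")
print("10.1.20.200 on same network?", ipaddress.ip_address("10.1.20.200") in network)
print("10.1.21.5 on same network?  ", ipaddress.ip_address("10.1.21.5") in network)
```

The first address shares the /24 prefix and is reachable on the same network; the second differs in the third octet and would have to be routed.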

Networks are designed to serve the connectivity requirements of applications, which in turn address the business needs of an organization[7]. This relationship explains why the network topology of a modern data center must be adapted to the requirements of the applications it hosts. Data center networking combines hardware such as switches and routers with services such as load balancing and analytics software, enabling the effective collection and distribution of data[4]. Networks also come in several types, including access networks, data center networks, and Wide-Area Networks (WANs), each serving a specific function[6].

The infrastructure of a data center typically comprises components such as switches, storage systems, servers, routers, and security devices, all housed within secure facilities supported by power and cooling systems, backup generators, and cabling plants[2]. This organized setup ensures the efficient operation and management of the data center's extensive networking demands.

Moreover, understanding the Transmission Control Protocol/Internet Protocol (TCP/IP) suite is vital for anyone working in IT or networking environments[8]. Within the data center, the aggregation layer terminates numerous access-layer uplinks and is responsible for aggregating the thousands of sessions entering and leaving the facility, so it requires a high-speed switching fabric with a high forwarding rate[9]. Mastery of these fundamental networking concepts lays the groundwork for exploring more advanced topics in high-performance networking and load balancing in large-scale data centers.

Load Balancing Concepts

Load balancing is a crucial technique in managing network traffic, particularly within large-scale data centers. It involves distributing network traffic evenly across a pool of resources, so that applications can serve millions of concurrent users and deliver the correct content, such as text, videos, and images, reliably and quickly[10]. This method is essential for optimizing network performance, enhancing reliability, and increasing capacity by spreading demand across multiple servers and compute resources[11].
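The article does not prescribe a particular distribution algorithm at this point. As a minimal sketch, round robin is one of the simplest ways to spread requests evenly across a pool; the backend names below are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation so each receives an equal share."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self._rotation = cycle(backends)

    def pick(self):
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
assignments = [balancer.pick() for _ in range(7)]
print(assignments)
# ['app-1', 'app-2', 'app-3', 'app-1', 'app-2', 'app-3', 'app-1']
```

Round robin ignores how busy each server actually is, which is why it is usually treated as a baseline rather than the end point.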

By preventing the overloading or crashing of any single server, load balancing contributes to fault tolerance and redundancy, thereby offering a robust mechanism to handle failures[12]. The implementation of load balancing can occur at various levels, including network, transport, or application, depending on the complexity and type of the requests being processed[12].
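To illustrate how a balancer contributes to fault tolerance, the following sketch extends round robin so that backends marked as failed are skipped until they recover. How a server gets marked down (for example, by a periodic health check) is assumed here and not shown; all names are hypothetical.

```python
class FailoverBalancer:
    """Round robin over backends, skipping any that are currently marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0  # index where the rotation resumes

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        # Examine each backend at most once per call, starting at the rotation point.
        for _ in range(len(self.backends)):
            candidate = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


lb = FailoverBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")  # e.g. a (hypothetical) health check failed
picks = [lb.pick() for _ in range(4)]
print(picks)  # ['app-1', 'app-3', 'app-1', 'app-3']
```

When the failed server recovers, calling mark_up returns it to the rotation without any change for clients.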

In addition to enhancing performance and reliability, load balancing plays a vital role in designing highly available infrastructures. Various types of load balancers can be deployed to meet specific network needs, each differing in storage capabilities, functionalities, and complexities[11]. This adaptability allows for a tailored approach to managing data center resources, ensuring that the network architecture aligns with the specific connectivity requirements of modern applications and their supporting business objectives[7].

Load Balancing Techniques

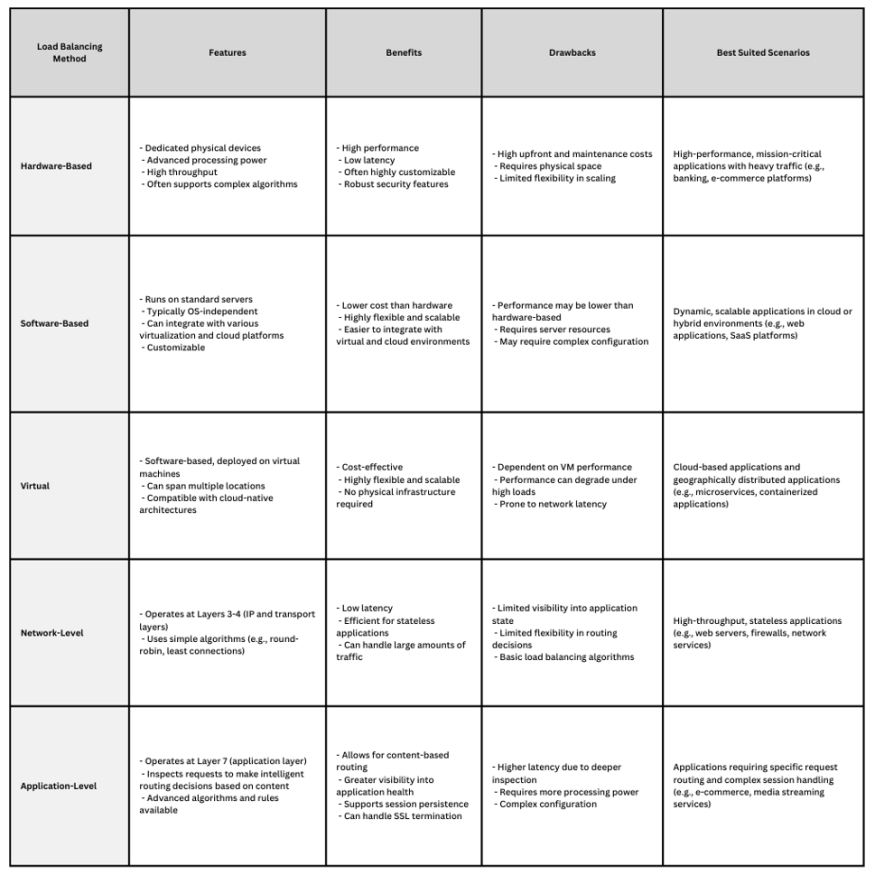

Load balancing is a critical technique utilized in large-scale data centers to distribute network traffic efficiently across a pool of resources, ensuring high performance, reliability, and availability of applications and services[10][12]. There are several methods and strategies for implementing load balancing, each catering to different requirements and scales.

Hardware-Based Load Balancing

Hardware-based load balancers are physical appliances designed to manage and distribute gigabytes of traffic securely to multiple servers. These devices are typically housed in data centers and can employ virtualization to create multiple digital or virtual load balancers that can be centrally managed[10][11]. While they offer high performance and reliability, the cost associated with purchasing and maintaining these appliances can be significant, often requiring expert consultants for management[11].

Software-Based Load Balancing

Software-based load balancing employs software applications or services to distribute incoming network traffic among servers. This approach is often more flexible than hardware-based solutions, as it allows for easy scalability and adaptation to changing network requirements by adding more software instances as needed[11][13]. Software load balancers can be deployed on standard servers and are generally more cost-effective, making them suitable for a wide range of organizations[13].

Virtual Load Balancing

Virtual load balancing runs load balancing software on virtual machines or instances rather than on dedicated appliances. This approach aims to combine the flexibility of software-based deployment with the capabilities of hardware-based systems, allowing for scalable and efficient distribution of network traffic[10]. Virtual load balancers are particularly useful in cloud environments, where resources can be dynamically allocated and managed according to demand.

Network-Level Load Balancing

Network-level load balancing operates at the network layer, distributing traffic based on IP address or protocol type[12]. This method is suitable for managing large volumes of traffic and provides fault tolerance and redundancy by directing traffic to multiple servers across different network paths[12][14]. Network-level load balancers ensure that no single server becomes overloaded, thus maintaining the overall stability and performance of the network.
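A network-level balancer can make its decision from packet header fields alone. The sketch below is an illustrative assumption rather than any particular product's method: it hashes the source address, destination address, and protocol so that every packet of a flow reaches the same server. The addresses come from documentation ranges, and the server pool is hypothetical.

```python
import hashlib

BACKENDS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]  # hypothetical server pool


def pick_backend(src_ip: str, dst_ip: str, protocol: str, backends=BACKENDS) -> str:
    """Map a flow to a backend using only network-layer fields.

    Hashing the same fields always yields the same backend, so all packets of a
    flow land on one server without the balancer inspecting payloads.
    """
    key = f"{src_ip}|{dst_ip}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


first = pick_backend("203.0.113.7", "198.51.100.10", "tcp")
again = pick_backend("203.0.113.7", "198.51.100.10", "tcp")
print(first, first == again)  # same flow, same backend
```

Plain modulo hashing remaps most flows whenever the pool size changes; consistent hashing is a common alternative when backends are added or removed frequently.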

Application-Level Load Balancing

Application-level load balancing operates at the application layer, distributing requests based on specific criteria such as URL, session data, or application-specific information[12]. This technique allows for more granular control over traffic distribution, enabling the optimization of application performance by routing requests to the most appropriate server based on its current load and capability[14].
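Application-level balancing can inspect the request itself. In the sketch below, which assumes a hypothetical routing table, the URL path selects a backend pool by longest matching prefix. Within that pool, the server with the fewest active connections handles the request, standing in for "current load".

```python
from urllib.parse import urlsplit

# Hypothetical routing table: URL path prefix -> pool of backend servers.
ROUTES = {
    "/api/": ["api-1", "api-2"],
    "/images/": ["static-1"],
    "/": ["web-1", "web-2"],  # catch-all for everything else
}


def route(url, routes=ROUTES):
    """Return the backend pool whose path prefix is the longest match for the URL."""
    path = urlsplit(url).path or "/"
    best = max((prefix for prefix in routes if path.startswith(prefix)), key=len)
    return routes[best]


def pick_server(url, active_connections, routes=ROUTES):
    """Within the matching pool, prefer the server with the fewest active connections."""
    pool = route(url, routes)
    return min(pool, key=lambda server: active_connections.get(server, 0))


api_pool = route("https://example.com/api/orders?id=7")
static_pool = route("https://example.com/images/logo.png")
web_pool = route("https://example.com/about")
chosen = pick_server("https://example.com/api/orders?id=7", {"api-1": 12, "api-2": 4})
print(api_pool, static_pool, web_pool, chosen)
```

Longest-prefix matching means the order of entries in the table does not matter, and the "/" entry guarantees every request has somewhere to go.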

By implementing these various load balancing techniques, organizations can optimize the performance and reliability of their large-scale data center infrastructures, ensuring that applications and services remain available and responsive to users[10][11][13].

Performance Optimization Strategies

In large-scale data centers, performance optimization is crucial to efficiently manage resources and deliver high-speed services to a multitude of users. Effective load balancing plays a pivotal role in this optimization by distributing network traffic evenly across servers, thereby enhancing application performance through improved response times and reduced network latency[10][11].

One of the key strategies for performance optimization is the use of dynamic load balancing algorithms. Unlike static algorithms, these assess the current state of servers before distributing traffic, ensuring that resources are utilized optimally[10]. For example, a least-response-time algorithm sends traffic to the servers with the quickest response times, providing faster service for users and keeping any one server from being overburdened[15].
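A minimal sketch of a least-response-time balancer follows. Smoothing the measurements with an exponentially weighted moving average, and the alpha=0.3 weighting, are assumptions added for illustration. They keep a single slow request from dominating the estimate.

```python
class LeastResponseTimeBalancer:
    """Send each request to the backend with the lowest smoothed response time."""

    def __init__(self, backends, alpha=0.3):
        self.alpha = alpha  # weight given to the newest sample (assumed value)
        self.avg_ms = {b: None for b in backends}  # None means "not measured yet"

    def pick(self):
        # Untried backends are chosen first so that every server gets measured.
        for backend, avg in self.avg_ms.items():
            if avg is None:
                return backend
        return min(self.avg_ms, key=self.avg_ms.get)

    def record(self, backend, elapsed_ms):
        old = self.avg_ms[backend]
        if old is None:
            self.avg_ms[backend] = float(elapsed_ms)
        else:
            # Exponentially weighted moving average of observed response times.
            self.avg_ms[backend] = (1 - self.alpha) * old + self.alpha * elapsed_ms


lb = LeastResponseTimeBalancer(["app-1", "app-2"])
first_pick = lb.pick()     # "app-1": no measurements yet, so try it first
lb.record("app-1", 120)
lb.record("app-2", 40)
fast_pick = lb.pick()      # "app-2" has the lower average
lb.record("app-2", 400)    # app-2 slows down: 0.7 * 40 + 0.3 * 400 = 148 ms
shifted_pick = lb.pick()   # traffic shifts back to "app-1" (120 ms)
```

A larger alpha reacts faster to sudden slowdowns but is noisier; a smaller one is steadier but slower to notice trouble.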

Another critical component is the breakdown of tasks into subtasks, which allows for more efficient processing and better scalability[16]. This method, although complex to implement, facilitates more effective utilization of available resources, making it particularly beneficial in environments where task completion times are variable.
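One common way to exploit subtasks is a shared work queue: the job is cut into many small chunks, and each worker pulls the next chunk as soon as it is free. The simulation below uses hypothetical chunk costs and compares this approach against a static split into equal-length blocks. It is an illustrative sketch, not a method the cited source prescribes.

```python
import heapq


def simulate_work_queue(chunk_costs, num_workers):
    """Simulate workers pulling subtasks from a shared queue.

    Whichever worker becomes free first takes the next chunk, so workers that
    draw cheap chunks simply take more of them. Returns each worker's finish time.
    """
    # Heap of (time the worker becomes free, worker id); all start idle at t=0.
    free_at = [(0.0, w) for w in range(num_workers)]
    heapq.heapify(free_at)
    finish = [0.0] * num_workers
    for cost in chunk_costs:
        t, w = heapq.heappop(free_at)
        t += cost
        finish[w] = t
        heapq.heappush(free_at, (t, w))
    return finish


def static_split(chunk_costs, num_workers):
    """Baseline: cut the list into equal-length contiguous blocks, one per worker."""
    size = -(-len(chunk_costs) // num_workers)  # ceiling division
    return [sum(chunk_costs[i:i + size]) for i in range(0, len(chunk_costs), size)]


costs = [4, 4, 1, 1, 1, 1]  # hypothetical subtask durations
print(max(static_split(costs, 2)), max(simulate_work_queue(costs, 2)))  # 9 6.0
```

With these costs on two workers, the static split finishes at 9 time units while the queue finishes at 6, because the worker that drew cheap chunks keeps pulling more work instead of sitting idle.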

Utilizing advanced network architectures also contributes significantly to performance optimization. This includes leveraging technologies like Global Server Load Balancing (GSLB), which directs traffic based on geographical location, ensuring that users connect to the nearest data center for the quickest possible service[17]. Moreover, integrating server load balancers with features like CPU and power redundancy enhances reliability and minimizes downtime, which is essential for maintaining high availability in data centers[9].
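GSLB products decide what "nearest" means in different ways. This sketch assumes the client's coordinates are already known and simply picks the healthy data center with the shortest great-circle distance. The site names and coordinates are hypothetical.

```python
import math

# Hypothetical data center locations: name -> (latitude, longitude) in degrees.
DATA_CENTERS = {
    "us-east": (39.0, -77.5),
    "eu-west": (53.3, -6.3),
    "ap-south": (19.1, 72.9),
}


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def nearest_data_center(client_location, healthy=None):
    """Pick the closest healthy site for a client (all sites if none specified)."""
    candidates = DATA_CENTERS.keys() if healthy is None else healthy
    return min(candidates,
               key=lambda name: great_circle_km(client_location, DATA_CENTERS[name]))


paris = (48.9, 2.35)
print(nearest_data_center(paris))                                   # eu-west
print(nearest_data_center(paris, healthy=["us-east", "ap-south"]))  # us-east
```

Passing a reduced healthy list shows how GSLB can also steer users away from a failed site to the next-closest one.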

Additionally, monitoring and management tools are vital for identifying bottlenecks and inefficiencies in the network. By continuously evaluating server performance and adjusting traffic distribution accordingly, these tools help maintain an optimal balance of load across the network infrastructure[15].

Challenges in High Performance Networking

High performance networking in large-scale data centers presents several challenges that must be addressed to ensure optimal functionality. One of the primary concerns is scalability: as data centers grow, they must handle increasing amounts of traffic without compromising performance. Scale also constrains the choice of load balancing algorithm. Static algorithms, which assign work in advance based on known task properties, are efficient to run, but finding an optimal assignment is an NP-hard scheduling problem. For very large computing centers they must therefore fall back on approximations that may still fall short[16].
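Because optimal static scheduling is NP-hard, practical static balancers rely on heuristics. As one well-known example (not a method the cited source singles out), the Longest Processing Time (LPT) rule sorts tasks by duration and greedily places each on the least-loaded server. Graham showed that its makespan is at most 4/3 − 1/(3m) times the optimum on m identical machines. The task durations below are hypothetical.

```python
import heapq


def lpt_schedule(task_times, num_servers):
    """Longest Processing Time first: a greedy heuristic for static scheduling.

    Tasks are sorted from longest to shortest and each is placed on the server
    with the smallest total assigned so far. This avoids searching all possible
    assignments, at the cost of not always finding the optimum.
    """
    loads = [(0, s) for s in range(num_servers)]  # (total assigned time, server id)
    heapq.heapify(loads)
    assignment = {s: [] for s in range(num_servers)}
    for t in sorted(task_times, reverse=True):
        load, s = heapq.heappop(loads)  # currently least-loaded server
        assignment[s].append(t)
        heapq.heappush(loads, (load + t, s))
    return assignment


plan = lpt_schedule([7, 5, 4, 3, 3, 2], num_servers=2)
print({s: sum(ts) for s, ts in plan.items()})  # {0: 12, 1: 12}
```

Here the heuristic happens to reach the optimal split of 12 and 12; on less favorable inputs it can be off by up to the bound above.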

Another significant challenge is network latency, which can adversely affect the speed and efficiency of applications running in the data center. Load balancing plays a critical role in reducing latency by distributing network traffic evenly across multiple servers, thus enhancing application performance and ensuring that no single server is overwhelmed[10][14].

Security is also a major concern in high performance networking. Traditional flat network architectures with perimeter firewalls are inadequate in the context of modern data centers, where traffic is predominantly east-west. This creates a need for more sophisticated security measures that can handle the complexity and dynamism of cloud and mobile environments[1]. Ensuring that sensitive data is accessed only by authorized users while maintaining high performance is a delicate balance that must be managed carefully[18].

Lastly, user privacy issues and legal challenges also complicate the networking landscape in data centers. The increasing reliance on mobile enterprise computing raises questions about data accessibility and the implications of law enforcement access to stored data, which must be addressed to protect user privacy and comply with legal standards[4].

Addressing these challenges requires a comprehensive approach that includes advanced load balancing techniques, enhanced security protocols, and strategies for scalability and latency reduction to maintain high performance in large-scale data centers.

Monitoring and Management Tools

In large-scale data centers, monitoring and management tools play a crucial role in maintaining high performance and reliability. These tools are essential for tracking the performance of both hardware and software components, allowing administrators to identify and address issues promptly. Advanced monitoring tools can help optimize resource allocation by providing real-time data on system performance and workload distribution[1]. Regular maintenance through these tools ensures the smooth operation of data centers by performing routine checks and updates on infrastructure[1].

Data Center Infrastructure Management (DCIM) software is a popular choice among businesses looking to manage their physical data center components effectively. This software aids in the comprehensive tracking and management of infrastructure, enabling more efficient use of resources and improved performance metrics[3]. By implementing robust monitoring systems, data centers can proactively prevent downtime and ensure that all systems operate within their optimal parameters.

Redundancy is another key aspect of monitoring and management in data centers. By employing redundant systems for power, cooling, and networking, data centers can eliminate single points of failure, thus enhancing their resilience and reliability[1]. Monitoring tools can quickly detect failures in any of these systems, triggering automatic switchover to backup systems to maintain uninterrupted service.

Additionally, specialized software agents installed on servers can continuously assess the availability of resources such as CPU and memory. This information is queried by load balancers to distribute traffic more effectively, ensuring efficient server utilization and maintaining high service performance[15]. Such integration between monitoring tools and load balancing systems facilitates a seamless and responsive data center environment.
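The sketch below assumes that each agent reports CPU and memory utilization as fractions between 0 and 1 (a hypothetical report format). The balancer then chooses a server at random, weighted by that server's remaining headroom.

```python
import random


def headroom(report):
    """Spare capacity from an agent report: the scarcer of CPU and memory headroom.

    `report` is a hypothetical dict such as {"cpu": 0.35, "mem": 0.60}.
    """
    return max(0.0, 1.0 - max(report["cpu"], report["mem"]))


def pick_by_headroom(reports, rng=random):
    """Choose a backend at random, weighted by the headroom its agent reports."""
    names = list(reports)
    weights = [headroom(reports[n]) for n in names]
    if sum(weights) == 0:
        raise RuntimeError("every backend reports full utilization")
    return rng.choices(names, weights=weights, k=1)[0]


reports = {
    "app-1": {"cpu": 0.90, "mem": 0.50},  # headroom 0.10
    "app-2": {"cpu": 0.30, "mem": 0.40},  # headroom 0.60
    "app-3": {"cpu": 0.20, "mem": 0.95},  # headroom 0.05 (memory-bound)
}
rng = random.Random(42)  # seeded only to make the example repeatable
picks = [pick_by_headroom(reports, rng) for _ in range(1000)]
print({name: picks.count(name) for name in reports})
```

Weighted random choice, rather than always picking the single emptiest server, avoids sending a burst of new requests to one machine in the interval before the next report arrives.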

Future Trends and Innovations

The future of high-performance networking and load balancing in large-scale data centers is poised to be shaped by several emerging trends and innovations. As the demands for cloud computing, big data analytics, and online services continue to grow, the need for efficient and scalable data center networks becomes more critical than ever[19].

One significant trend is the increasing adoption of Software-Defined Networking (SDN). SDN allows for more flexible and programmable network architectures by decoupling the control plane from the data plane, which enables dynamic adjustment of network paths and resources according to real-time needs[5]. This flexibility is crucial in optimizing network performance and efficiently managing data flows within large-scale data centers.
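To show what decoupling the control plane from the data plane means in practice, the sketch below models a controller that holds a global view of a small, hypothetical leaf-spine topology. It computes a path with Dijkstra's algorithm and pushes one forwarding entry to each switch on that path. The dictionaries stand in for switch flow tables; this is not the API of OpenFlow or of any real controller.

```python
import heapq

# Hypothetical topology: switch -> {neighbor: link cost}. The controller sees all of it.
TOPOLOGY = {
    "leaf1": {"spine1": 1, "spine2": 1},
    "leaf2": {"spine1": 1, "spine2": 1},
    "spine1": {"leaf1": 1, "leaf2": 1},
    "spine2": {"leaf1": 1, "leaf2": 1},
}


def shortest_path(topology, src, dst):
    """Dijkstra's algorithm over the controller's global view of the network."""
    dist, prev, queue = {src: 0}, {}, [(0, src)]
    while queue:
        d, node = heapq.heappop(queue)
        if node == dst:
            break
        if d > dist[node]:
            continue  # stale queue entry
        for nbr, cost in topology[node].items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr], prev[nbr] = nd, node
                heapq.heappush(queue, (nd, nbr))
    if dst not in dist:
        raise ValueError(f"no path from {src} to {dst}")
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


def install_flow(flow_tables, topology, src, dst, match):
    """Control plane: compute a path, then push one forwarding rule per switch.

    `flow_tables` stands in for the switches' data planes, which only apply rules.
    """
    path = shortest_path(topology, src, dst)
    for here, next_hop in zip(path, path[1:]):
        flow_tables.setdefault(here, {})[match] = next_hop
    return path


tables = {}
path = install_flow(tables, TOPOLOGY, "leaf1", "leaf2", match="10.2.0.0/16")
# If spine1 fails, the controller recomputes on the reduced topology and overwrites
# the affected entries. (A real controller would also withdraw the stale rule on spine1.)
degraded = {
    node: {nbr: cost for nbr, cost in nbrs.items() if nbr != "spine1"}
    for node, nbrs in TOPOLOGY.items()
    if node != "spine1"
}
rerouted = install_flow(tables, degraded, "leaf1", "leaf2", match="10.2.0.0/16")
print(path, rerouted)
```

The switches never run a routing algorithm themselves; all path decisions live in the controller, which is what allows paths to be adjusted centrally as conditions change.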

Another emerging innovation is the use of artificial intelligence (AI) and automation to enhance network operations. AI-driven solutions can predict traffic patterns and potential network failures, allowing for proactive management and reduction of downtime[5]. Automation, in tandem with AI, enables the creation of autonomous IT operations, thereby improving the competitiveness of organizations by ensuring seamless network performance.
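Production systems may use machine learning models for this kind of prediction. As a deliberately simple, non-AI stand-in that still shows the predict-then-act loop, the sketch below forecasts link traffic with Holt's linear exponential smoothing and flags a link before it saturates. The smoothing constants, the 160 Gbps capacity, the 80% threshold, and the traffic samples are all assumptions.

```python
def holt_forecast(series, alpha=0.5, beta=0.3, steps_ahead=1):
    """Holt's linear (double) exponential smoothing: track a level and a trend."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + steps_ahead * trend


LINK_CAPACITY_GBPS = 160                 # hypothetical link capacity
recent_gbps = [100, 110, 120, 130, 140]  # hypothetical per-interval measurements

forecast = holt_forecast(recent_gbps)
alert = forecast > 0.8 * LINK_CAPACITY_GBPS
if alert:
    print(f"forecast {forecast:.0f} Gbps exceeds 80% of capacity; rebalance ahead of time")
```

Acting on the forecast, rather than on the current reading of 140 Gbps, gives automation time to shift traffic before congestion actually occurs.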

Edge computing is also becoming an integral part of data center networks, especially in supporting applications requiring low latency and high bandwidth. By processing data closer to the source or edge of the network, edge computing reduces the burden on central data centers and enhances the overall efficiency and performance of the network[19].

In addition to technological advancements, the importance of cybersecurity within data centers continues to escalate. As networks become more complex, ensuring secure data transmission and protecting against cyber threats are paramount. Innovations in encryption technologies and network security protocols are essential to safeguard sensitive data and maintain trust in data center operations[19].

Finally, the integration of emerging technologies like blockchain can offer decentralized and secure solutions for data management and transactions across network systems. By leveraging the distributed nature of blockchain, data center networks can potentially enhance their resilience and transparency in data handling[8].

Case Studies and Real-World Examples

In recent years, significant advancements have been made in the field of high-performance networking and load balancing within large-scale data centers. These developments have been driven by the need to efficiently manage the immense data flows produced by various applications, each demanding different levels of network transmission performance[19]. Real-world examples of successful implementations in data centers provide valuable insights into how theoretical concepts are applied in practical environments.

One notable case study involves the innovative flow optimization strategies that have been actively proposed by researchers. These strategies aim to enhance the performance and efficiency of data center networks (DCNs) through techniques such as load balancing, congestion control, and routing optimization[19]. The implementation of these strategies has demonstrated the potential to improve network performance significantly, allowing data centers to handle the increasing demands of modern applications.

Another example of practical implementation is the use of malleable algorithms in large-scale computing clusters. These algorithms are capable of adapting to fluctuating processor availability during execution, which is crucial for maintaining system performance in the event of component failures[16]. By incorporating these adaptable algorithms, data centers can ensure more robust and resilient operations, even under challenging conditions.

The integration of AI and automation has also been highlighted as a forward-thinking approach by IT leaders to enhance competitiveness and efficiency in network management[5]. By leveraging these technologies, data centers can automate routine tasks, thus reducing human error and optimizing resource allocation. This has proven to be a critical factor in maintaining high performance in increasingly complex network environments.

These case studies underscore the importance of continuous innovation and adaptation in the field of high-performance networking. As data centers continue to evolve, the lessons learned from these real-world examples will guide future developments, ensuring that networks remain capable of meeting the growing demands of digital organizations.

References

[1] Palo Alto Networks. (2024). What is a Data Center? Palo Alto Networks. https://www.paloaltonetworks.com/cyberpedia/what-is-a-data-center.html

[2] Cisco Systems, Inc. (2023, October 27). What Is a Data Center? Cisco Systems, Inc. https://www.cisco.com/c/en/us/solutions/data-center-virtualization/what-is-a-data-center.html

[3] Sunbird Software, Inc. (2024). Data Center Components. Sunbird Software, Inc. https://www.sunbirddcim.com/glossary/data-center-components

[4] Enterprise Engineering Solutions. (2022). 7 Important Datacenter Networking Challenges You Would Loved to Know in 2022. EES Corporation. https://www.eescorporation.com/datacenter-networking-challenges/

[5] IBM. (2024, July 1). What is computer networking? IBM. https://www.ibm.com/topics/networking

[6] Kentik. (n.d.). Network Architecture Explained: Understanding the Basics of Modern Networks. Kentik. https://www.kentik.com/kentipedia/network-architecture/

[7] Dutt, D. G. (2024). BGP in the Data Center. O'Reilly Media, Inc. https://www.oreilly.com/library/view/bgp-in-the/9781491983416/ch01.html

[8] Choudhary, V. (2024, July 22). Top 50 Plus Networking Interview Questions and Answers for 2024. GeeksforGeeks. https://www.geeksforgeeks.org/networking-interview-questions/

[9] Cisco. (2012, November 27). Cisco Data Center Infrastructure 2.5 Design Guide. Cisco. https://www.cisco.com

[10] Amazon Web Services. (2024). What is load balancing? Amazon Web Services. https://aws.amazon.com/what-is-load-balancing/

[11] Yasar, K., & Irei, A. (2023, January). Load balancing. TechTarget. https://www.techtarget.com/searchnetworking/definition/load-balancing

[12] LinkedIn. (2024, August 12). What are the benefits and challenges of using cloud services for load balancing and distributed computing? LinkedIn. https://www.linkedin.com/advice/0/what-benefits-challenges-using-cloud-services

[13] anuupadhyay. (2024, October 4). Load Balancer – System Design Interview Question. GeeksforGeeks. https://www.geeksforgeeks.org/load-balancer-system-design-interview-question/

[14] Cloudflare. (n.d.). What is load balancing? | How load balancers work. Cloudflare. https://www.cloudflare.com/learning/performance/what-is-load-balancing/

[15] Cloudflare. (n.d.). Types of load balancing algorithms. Cloudflare. https://www.cloudflare.com/learning/performance/types-of-load-balancing-algorithms/

[16] Wikipedia contributors. (n.d.). Load balancing (computing). Wikipedia. https://en.wikipedia.org/wiki/Load_balancing_(computing)

[17] Toomey, D. (2017, June 2). Mr Worldwide presents: A brief overview of global server load balancing (GSLB). Edgenexus. https://www.edgenexus.io/2017/06/02/

[18] Winkelman, R. (2013). Chapter 1: What is a Network? Florida Center for Instructional Technology, College of Education, University of South Florida. https://fcit.usf.edu/network/chap1/chap1.htm

[19] Elsevier. (2020). [Title unavailable]. ScienceDirect. https://www.sciencedirect.com/

About the Author

Ishan Bhatt is a distinguished Network Engineer at Google, specializing in high-performance networking solutions, load balancing, and cloud computing for large-scale infrastructures. With extensive experience in managing complex networking environments, Ishan leads initiatives that integrate advanced technologies like SDN, virtualization, and AI-driven automation.

© 2025 ScienceTimes.com All rights reserved. Do not reproduce without permission. The window to the world of Science Times.