Ramanakar Danda is an internationally recognized expert in Artificial Intelligence (AI) and Machine Learning (ML), with a particular focus on the healthcare sector. He has made significant contributions to the field of agricultural machinery as well. With over 17 years of experience as an IT architect, he has successfully applied advanced technologies to solve complex problems across various industries. His latest research includes "Key Reasons for Hallucinations in Large Language Models (LLMs) and How to Fine-Tune Hyperparameters to Mitigate Them."

Introduction

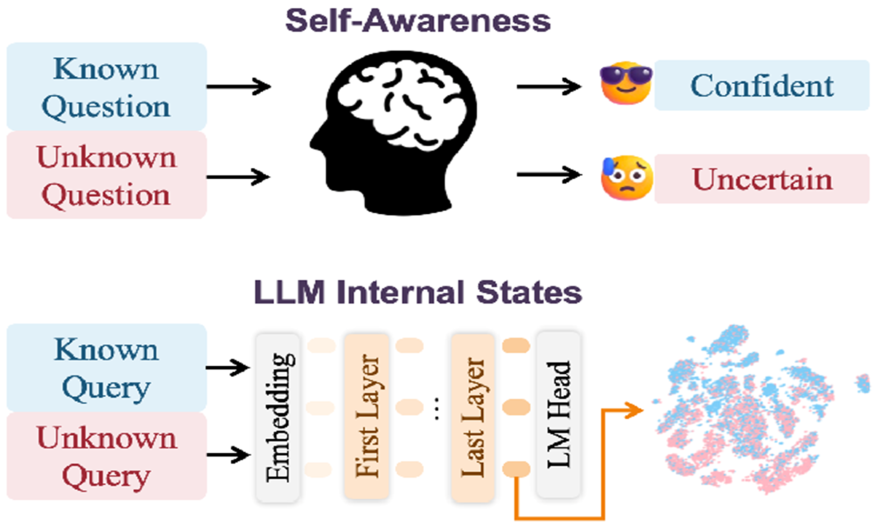

Large Language Models (LLMs), such as GPT, have greatly advanced natural language understanding and generation. Trained on large text corpora, these models have transformed many sectors, from content generation to customer support. A common problem with LLMs, however, is hallucination: the model produces plausible-sounding statements that are factually incorrect or irrelevant. Understanding the key causes of hallucinations, and how to tune hyperparameters to limit them, can improve the consistency and accuracy of these models.

Key Reasons for Hallucinations in LLMs

Lack of Proper Training Material on Specific Topics

LLMs are trained on large corpora covering a wide range of subjects, but this data can be skewed, leaving some topics thinly represented. When a prompt concerns an underrepresented topic, the model has seen too few relevant examples during training and is more likely to hallucinate.

Linguistic Ambiguity

Natural language is inherently ambiguous. A word or phrase can have several meanings depending on context, which makes it hard for an LLM to disambiguate the input and respond accurately. When explicit context or clear cues are missing, the model may answer a misinterpreted version of the question, producing a hallucination.

Overfitting to Patterns in the Training Set

LLMs can overfit to patterns in their training data, memorizing and repeating associations rather than learning to generalize to new inputs. An overfitted model can generate sentences that are grammatically fluent but factually wrong or logically incoherent.

Model Architecture Constraints

Despite their advanced architectures, LLMs such as GPT still struggle with long-range dependencies and multi-step reasoning. When answering complex questions that require reasoning over a long span of text, the model may fail to retain every relevant detail and fill the gaps with hallucinated content.

Biases in the Training Set

The data used to train LLMs can contain social, cultural, or factual biases. These biases can surface as hallucinations: the model may state incorrect or skewed claims that match patterns in its training data even when those claims are untrue or misleading.

Sampling Methods (Top-K, Top-P, Temperature)

The way the model generates text, including sampling strategies such as top-k sampling, top-p (nucleus) sampling, and the temperature setting, affects how likely it is to hallucinate. Settings that allow more randomness can produce more imaginative but less reliable replies, whereas settings that allow less randomness produce more deterministic but more formulaic replies.

How to Fine-Tune Hyperparameters to Reduce Hallucinations

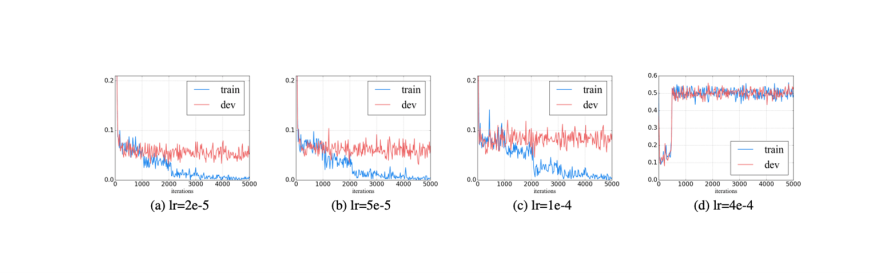

Temperature Adjustment

Temperature controls the randomness of the model's output. A higher temperature (for instance, 1.0) increases randomness, yielding more diverse replies that are also more prone to hallucination. A lower temperature (for instance, 0.2) makes the output more deterministic, yielding less creative but usually more accurate replies. Lowering the temperature therefore helps reduce hallucinations by making the model more conservative and steering it toward its most likely replies.

Fine-Tuning Tip: Temperature is applied at generation time, so set it between 0.2 and 0.5 when running the fine-tuned model. This range encourages more reliable replies without the excess randomness that can lead to hallucinations.
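To make the effect of temperature concrete, here is a minimal Python sketch, assuming a toy set of four candidate next tokens with made-up logits. The function names `softmax_with_temperature` and `sample_token` and all values are hypothetical illustrations, not any particular library's API. The logits are divided by the temperature before the softmax, so lower temperatures shift probability toward the top candidate.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Convert raw logits into probabilities, scaled by temperature.

    Dividing logits by a temperature below 1.0 sharpens the distribution
    (the most likely token gains probability); a temperature above 1.0
    flattens it, so unlikely tokens are sampled more often.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng=random):
    """Draw one token according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-token candidates and logits, for illustration only.
tokens = ["Paris", "Lyon", "Berlin", "Atlantis"]
logits = [4.0, 2.0, 1.0, 0.5]

for t in (1.0, 0.5, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running the loop shows the top candidate's probability growing as the temperature falls, which is the "more conservative" behavior described above.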

Tuning Top-K and Top-P (Nucleus Sampling)

Top-k sampling limits the model's choices to the k most likely next words, while top-p (nucleus) sampling limits them to the smallest set of words whose combined probability reaches p. Both controls determine how many candidates the model can choose from when generating text.

Top-K: Lowering k forces the model to choose among fewer options, reducing the chance of hallucinations. Setting k too low, however, can make the model overly restrictive and uncreative.

Top-P: Lowering p (for example, to 0.8) restricts the model to a smaller set of likely words, which can reduce hallucinated content.

Fine-Tuning Tip: Experiment with top-k values between 30 and 100 and top-p values between 0.8 and 1.0. Both settings help limit hallucinations by narrowing the range of candidate words.
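The two filters can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical next-token distribution stored as a dict; `top_k_filter`, `top_p_filter`, and `sample` are illustrative names, not a library API. Applying top-k first and then top-p is one common ordering, not the only one.

```python
import random

def top_k_filter(probs, k):
    """Keep only the k most likely tokens and renormalize."""
    if k <= 0:
        raise ValueError("k must be positive")
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept = dict(ranked[:k])
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}

def top_p_filter(probs, p):
    """Keep the smallest set of most likely tokens whose cumulative
    probability reaches p (nucleus sampling), then renormalize."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    kept = {}
    cumulative = 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {tok: q / total for tok, q in kept.items()}

def sample(probs, rng=random):
    """Draw one token from a (filtered) distribution."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical next-token distribution, for illustration only.
probs = {"Paris": 0.70, "Lyon": 0.15, "Marseille": 0.08, "Berlin": 0.05, "Atlantis": 0.02}

# Top-k trims to 3 candidates; top-p then keeps only the head of that list.
filtered = top_p_filter(top_k_filter(probs, k=3), p=0.8)
print(filtered)
print(sample(filtered))
```

Low-probability candidates such as "Atlantis" never survive the filters, which is the mechanism by which smaller k and p values cut off unlikely (and often fabricated) continuations.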

Training on Specialized Material

One of the most effective ways to limit hallucinations is to fine-tune the model on data from the target domain. If the model must generate content in a specific domain (for example, medicine, law, or science), training it on high-quality, relevant datasets helps it focus on accurate, factual information in that field. This reduces hallucinations when the model is asked domain-specific questions.

Fine-Tuning Tip: Collect domain-specific datasets and fine-tune the model on them, making sure the training data is diverse, plentiful, and factually accurate. For example, a model for medical applications should be trained on medical literature, clinical guidelines, and trusted repositories such as PubMed.
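Before fine-tuning, the raw domain data usually needs cleaning. The sketch below is one possible approach, assuming a hypothetical record format with `prompt`, `response`, and `source` fields and a hypothetical allow-list of trusted sources. It drops incomplete records, records without trusted provenance, and exact duplicates, then writes JSON Lines, a format many fine-tuning pipelines accept. The records and the "condition X" / "drug A" placeholders are invented for illustration.

```python
import json

# Hypothetical allow-list of trusted sources; a real project would define its own.
TRUSTED_SOURCES = {"pubmed", "clinical_guideline", "textbook"}

def prepare_domain_dataset(records):
    """Filter raw records into a clean fine-tuning set.

    Each record is assumed (hypothetically) to be a dict with
    "prompt", "response", and "source" keys.
    """
    seen = set()
    cleaned = []
    for rec in records:
        prompt = rec.get("prompt", "").strip()
        response = rec.get("response", "").strip()
        source = rec.get("source", "").lower()
        if not prompt or not response:
            continue  # drop incomplete examples
        if source not in TRUSTED_SOURCES:
            continue  # drop examples without trusted provenance
        key = (prompt.lower(), response.lower())
        if key in seen:
            continue  # drop case-insensitive exact duplicates
        seen.add(key)
        cleaned.append({"prompt": prompt, "response": response, "source": source})
    return cleaned

def to_jsonl(records):
    """Serialize records as JSON Lines, one example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)

# Placeholder records, for illustration only.
raw = [
    {"prompt": "What is the first-line treatment for condition X?", "response": "Drug A.", "source": "PubMed"},
    {"prompt": "What is the first-line treatment for condition X?", "response": "Drug A.", "source": "pubmed"},
    {"prompt": "Is condition Y contagious?", "response": "", "source": "pubmed"},
    {"prompt": "What cures condition Z?", "response": "Herb B.", "source": "forum"},
]

print(to_jsonl(prepare_domain_dataset(raw)))
```

Filtering on provenance is a blunt instrument; it keeps the example short, but a production pipeline would also check factual accuracy and topic coverage.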

Incorporating Fact-Checking Mechanisms

Another useful way to reduce hallucinations is to build fact-checking into inference or training. This can take the form of automated systems that cross-reference generated content against trusted sources, databases, or APIs to confirm that it is factual.

Fine-Tuning Tip: Ground generation in retrieved evidence with retrieval-augmented generation (RAG), or add a post-generation check against external knowledge bases to verify the output. During fine-tuning, you can also train the model to signal when it is unsure of an answer and to respond with a disclaimer in that case.
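A post-generation check can be sketched with a tiny in-memory knowledge base. This is a deliberately crude illustration, not a production RAG system: it assumes a hypothetical `KNOWLEDGE_BASE` of trusted sentences, uses word overlap as a stand-in for embedding-based retrieval, and returns a disclaimer when too few of the answer's content words are supported by the best-matching passage. The 0.6 threshold is an arbitrary assumption.

```python
import re

# Hypothetical trusted knowledge base; in practice this would be a search
# index, database, or API over vetted sources.
KNOWLEDGE_BASE = [
    "Water boils at 100 degrees Celsius at sea level.",
    "The Eiffel Tower is located in Paris, France.",
]

STOPWORDS = {"the", "a", "an", "is", "at", "in", "of", "and", "to"}

def content_words(text):
    """Lowercased alphanumeric words, minus a few common stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def retrieve(query, kb=KNOWLEDGE_BASE):
    """Return the passage with the largest word overlap with the query
    (a crude stand-in for embedding-based retrieval)."""
    q = content_words(query)
    return max(kb, key=lambda passage: len(q & content_words(passage)))

def support_score(answer, passage):
    """Fraction of the answer's content words that appear in the passage."""
    words = content_words(answer)
    if not words:
        return 0.0
    return len(words & content_words(passage)) / len(words)

def checked_answer(question, draft_answer, threshold=0.6):
    """Return the draft if retrieved evidence supports it, else a disclaimer."""
    evidence = retrieve(question + " " + draft_answer)
    if support_score(draft_answer, evidence) >= threshold:
        return draft_answer
    return "I could not verify this answer against trusted sources."

print(checked_answer("Where is the Eiffel Tower?", "The Eiffel Tower is in Paris."))
print(checked_answer("Where is the Eiffel Tower?", "The Eiffel Tower is in Berlin, Germany."))
```

The second draft shares only "Eiffel" and "Tower" with the evidence, so it falls below the threshold and is replaced by the disclaimer, mirroring the "signal when unsure" behavior described in the tip.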

Regularization Techniques

Regularization techniques such as weight decay and dropout keep the model from overfitting to patterns in the training set. Overfitting can encourage hallucinations because the model memorizes specific phrases or answers instead of learning patterns that generalize.

Fine-Tuning Tip: Apply dropout during fine-tuning so the model learns more general patterns and does not overfit to specific examples, which can lead to hallucinations.
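The two regularizers named above can be shown in plain Python. This is a minimal sketch for intuition, assuming lists of floats rather than tensors; frameworks such as PyTorch provide their own dropout layers and optimizer weight-decay options. The function names are hypothetical. The dropout shown is the "inverted" variant, and the weight decay is the L2 form applied inside a plain SGD step.

```python
import random

def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: zero each activation with probability p during
    training and scale survivors by 1/(1-p) so the expected value is
    unchanged. At inference (training=False) inputs pass through untouched."""
    if not 0 <= p < 1:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def sgd_step_with_weight_decay(weights, grads, lr=0.01, weight_decay=0.01):
    """One SGD update with L2 weight decay: each weight is pulled toward
    zero in proportion to its size, discouraging the large weights that
    let a model memorize individual training examples."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

rng = random.Random(0)
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng))
print(sgd_step_with_weight_decay([1.0, -2.0], [0.0, 0.0], lr=0.1, weight_decay=0.1))
```

Because dropout randomly removes units at each step, no single pathway can carry a memorized answer on its own, which is why it pushes the model toward more general patterns.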

Using Contrastive Learning

Contrastive learning trains a model to distinguish accurate responses from inaccurate ones by showing it contrasting examples during training. A contrastive loss function rewards the model for preferring responses that agree with the facts and the context.

Fine-Tuning Tip: Apply contrastive learning during fine-tuning by providing pairs of accurate and hallucinated responses, teaching the model to distinguish factual content from hallucinated content.
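One common form of contrastive loss is InfoNCE, shown below as a minimal sketch. It assumes the model already assigns a scalar score to each candidate response (for example, a log-likelihood or a similarity to the reference context); how those scores are produced is left open and is an assumption here. The loss is small when the accurate response outscores every hallucinated one, and large otherwise.

```python
import math

def contrastive_loss(pos_score, neg_scores, temperature=1.0):
    """InfoNCE-style loss for one accurate response (positive) and one or
    more hallucinated responses (negatives).

    Computes -log(exp(s_pos / t) / sum_i exp(s_i / t)) over all candidates,
    using the log-sum-exp trick for numerical stability.
    """
    scores = [pos_score] + list(neg_scores)
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scaled))
    return log_sum_exp - scaled[0]

# Hypothetical scores: the accurate answer vs. two hallucinated answers.
print(contrastive_loss(3.0, [0.5, 1.0]))  # accurate answer scored highest: low loss
print(contrastive_loss(0.5, [3.0, 1.0]))  # hallucination scored highest: high loss
```

Minimizing this loss during fine-tuning pushes the score of the accurate response up relative to the hallucinated ones, which is the preference the paragraph above describes.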

Monitoring and Iterative Fine-Tuning

Hallucinations can be reduced by fine-tuning the model iteratively, guided by human feedback and regular monitoring of its behavior. Collecting feedback on generated outputs and using it to refine the model further can reduce hallucinations over time.

Fine-Tuning Tip: Set up a feedback process in which human reviewers or automated systems periodically review model outputs, then adjust training based on the hallucinations they observe.
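The feedback loop can be reduced to a small monitoring routine. The sketch below assumes a hypothetical `Review` record produced by a human reviewer or automated checker, and a hypothetical policy: trigger another fine-tuning round once at least `min_reviews` outputs have been reviewed and the hallucination rate exceeds `threshold`. Both defaults are illustrative, not recommendations.

```python
from dataclasses import dataclass

@dataclass
class Review:
    output_id: str
    hallucinated: bool  # set by a human reviewer or an automated checker

def hallucination_rate(reviews):
    """Fraction of reviewed outputs flagged as hallucinated."""
    if not reviews:
        return 0.0
    return sum(r.hallucinated for r in reviews) / len(reviews)

def needs_retraining(reviews, threshold=0.05, min_reviews=50):
    """Flag the model for another fine-tuning round when the observed
    hallucination rate exceeds a (hypothetical) tolerance threshold,
    once enough outputs have been reviewed to trust the estimate."""
    return len(reviews) >= min_reviews and hallucination_rate(reviews) > threshold

def collect_training_cases(reviews):
    """Return the IDs of hallucinated outputs so they can be corrected and
    added to the next fine-tuning dataset."""
    return [r.output_id for r in reviews if r.hallucinated]

# Simulated review batch: every tenth output was flagged.
reviews = [Review(str(i), i % 10 == 0) for i in range(100)]
print(hallucination_rate(reviews), needs_retraining(reviews))
print(collect_training_cases(reviews))
```

Feeding the corrected versions of the flagged outputs back into the training set closes the loop, so each round targets the specific failures observed in the previous one.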

Conclusion

Hallucinations in LLMs are a serious issue, but careful tuning and training can substantially reduce them. Through hyperparameter tuning of temperature, top-k, and top-p, domain-specific training, regularization, fact-checking mechanisms, and contrastive learning, LLMs can be made more accurate and trustworthy. The most important step is to combine these approaches with regular monitoring and feedback so that the model keeps improving at generating factually accurate, context-appropriate responses.

Ramanakar Reddy Danda Website: LINK

© 2025 ScienceTimes.com All rights reserved. Do not reproduce without permission. The window to the world of Science Times.