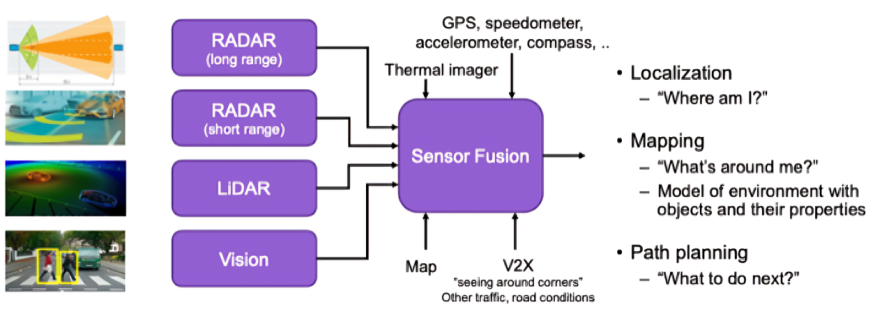

Sensor fusion and multi-sensor data integration are crucial for enhancing perception in autonomous vehicles (AVs) by using RADAR, LiDAR, cameras, and ultrasonic sensors. This technology allows AVs to build a detailed model of their surroundings, which is essential for navigation and obstacle detection. Each sensor has its strengths and weaknesses; for example, LiDAR provides accurate distance measurements, while cameras offer detailed visual cues. By combining these technologies, sensor fusion achieves more reliable environmental perception, enabling safer interaction with other road users. However, the process involves challenges like handling massive data volumes and ensuring real-time processing accuracy, which are key areas of ongoing research and development. Future improvements in sensor fusion are expected to increase safety and efficiency in autonomous driving through advanced algorithms and machine learning.

Understanding Sensor Fusion

Sensor fusion is a critical technology underpinning the development and operation of autonomous vehicles (AVs). It involves the integration of data from multiple sensor sources, such as RADAR, LiDAR, cameras, and ultrasonic sensors, to create a comprehensive model of the vehicle's environment[1][2]. This process is essential for the interpretation of environmental conditions with a high degree of detection certainty, which is not achievable by any single sensor working in isolation[2].

Importance in Autonomous Vehicles

The integration of inputs from diverse sensing technologies is crucial for autonomous vehicles. Each sensor type has its unique strengths and limitations, and by combining their outputs, sensor fusion provides a more accurate, reliable, and comprehensive understanding of the surrounding environment[1]. This enriched data model is vital for supporting the intelligent decision-making required for vehicle automation and control[3]. It enables AVs to operate safely and efficiently by significantly reducing the uncertainty inherent in data captured from a single sensor source[4].
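The claim that fusion reduces single-sensor uncertainty can be illustrated with inverse-variance weighting, one of the simplest ways to combine independent measurements. The sketch below is only an illustration: the function name `fuse_inverse_variance` and the sensor readings are hypothetical, and it assumes the sensor errors are independent and roughly Gaussian.

```python
def fuse_inverse_variance(measurements):
    """Fuse independent (value, variance) pairs into one estimate.

    Each measurement is weighted by 1/variance, so more precise sensors
    contribute more. Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    fused_value = sum(w * val for w, (val, _) in zip(weights, measurements)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical range readings (metres) to the same obstacle.
lidar = (20.0, 0.01)  # assumed to be the more precise sensor
radar = (20.4, 0.04)  # assumed to be noisier
value, variance = fuse_inverse_variance([lidar, radar])
print(f"fused range = {value:.2f} m, variance = {variance:.4f}")
```

Under these assumptions the fused variance is always smaller than the variance of the best individual sensor, which is the quantitative sense in which fusion reduces uncertainty.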

Applications and Benefits

Sensor fusion is applied in various functionalities within AV systems, including obstacle detection, navigation, and the management of complex driving scenarios. It underpins the vehicle's ability to perceive and respond to static and dynamic elements within its environment, such as other vehicles, pedestrians, and road infrastructure[1][5].

Enhancing Decision-Making

By leveraging the combined strengths of multiple sensors, sensor fusion plays a major role in the decision-making processes of AVs. It allows vehicles to acquire a more detailed and accurate perception of their surroundings, facilitating safer and more reliable autonomous driving[6]. The fusion of sensor data enables the vehicle to better predict and react to potential hazards, thereby improving road safety and security[7].

Real-Time Processing and Efficiency

Efficient real-time processing is another significant advantage offered by sensor fusion. In autonomous driving, where timing and quick response are crucial, processing and integrating data from multiple sensors in real time lets the vehicle perceive and adapt rapidly to changing traffic conditions and unexpected situations[8]. Moreover, certain approaches aim to reduce the computational load by fusing only the relevant sensor data, improving the speed and efficiency of the processing system[9].

Challenges and Future Directions

Despite its benefits, sensor fusion also presents several challenges, including the management of large volumes of data from various sensors, ensuring the accuracy and reliability of the fused data, and minimizing the computational resources required for real-time processing[10][11]. Future developments in sensor fusion technology will likely focus on improving the efficiency, scalability, and robustness of integration techniques. This includes the exploration of advanced computational methods and algorithms to enhance the accuracy and speed of autonomous driving systems[12].

Types of Sensors in Autonomous Vehicles

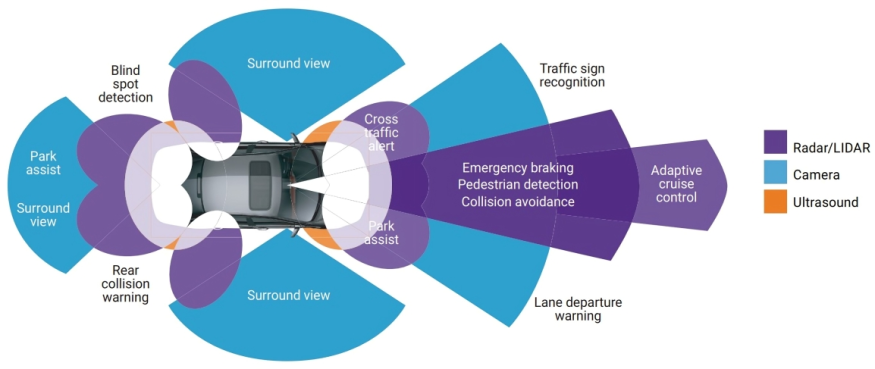

Autonomous vehicles utilize a range of sensors to navigate through environments safely. These sensors are critical for the vehicles' ability to detect and understand their surroundings, make decisions, and execute driving tasks without human intervention. The primary types of sensors employed in autonomous vehicles include LiDAR, RADAR, cameras, and ultrasonic sensors, each offering distinct capabilities and contributing to the comprehensive perception system of the vehicle.

Ultrasonic Sensors

Ultrasonic sensors use sound waves to detect objects around the vehicle, primarily serving in parking assistance and collision avoidance at low speeds. These sensors emit ultrasonic waves, which bounce off objects and return to the sensor, thereby measuring the distance to the object based on the time it takes for the waves to return[13]. Ultrasonic sensors are especially useful for close-range detection and maneuvering in tight spaces.
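The time-of-flight calculation described above can be sketched in a few lines. The speed of sound used here (343 m/s, roughly dry air at 20 °C) and the function name `echo_distance_m` are assumptions for illustration; a real sensor would typically compensate for temperature.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at about 20 degrees Celsius


def echo_distance_m(round_trip_s, speed=SPEED_OF_SOUND_M_PER_S):
    """Distance to the reflecting object from the echo's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so halve the path length.
    return speed * round_trip_s / 2.0


# A hypothetical echo that returns after 10 milliseconds.
print(f"{echo_distance_m(0.010):.3f} m")
```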

LiDAR Sensors

LiDAR (Light Detection and Ranging) sensors play a pivotal role in the sensor suite of autonomous vehicles. These sensors emit laser beams to measure the distance between the vehicle and objects in its environment, allowing for the creation of detailed three-dimensional maps of the surroundings[14][15]. LiDAR is valued for its precision and its high-resolution 3D output, which has made it a favored choice among autonomous vehicle developers such as Google, Uber, and Toyota[14]. However, LiDAR units are typically more expensive than other sensor types[16].

RADAR Sensors

RADAR (Radio Detection and Ranging) sensors use radio waves to detect objects, measure their distance and relative speed, and are instrumental in navigating autonomous vehicles. These sensors are robust across various weather conditions and are available in short- and long-range variants to fulfill different functions. Short-range RADAR sensors are typically used for blind-spot monitoring, lane-keeping assistance, and parking aids, while long-range RADAR sensors are crucial for adaptive cruise control and emergency braking systems[14][17].

Camera Sensors

Cameras are indispensable for the perception systems of autonomous vehicles. They capture visual information similar to how human eyes perceive the environment, providing data on the texture, color, and contour of surroundings[7].

Cameras are particularly adept at recognizing and interpreting road signs, traffic lights, and lane markings. However, their effectiveness can be diminished by poor lighting or adverse weather conditions[7]. To overcome the limitations of monocular cameras, autonomous vehicles often employ stereo cameras to extract depth information through the disparity between images captured by two lenses positioned side by side[18].
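For a rectified stereo pair under the pinhole camera model, depth follows from disparity as Z = f · B / d, where f is the focal length in pixels, B the baseline between the two lenses, and d the disparity in pixels. The sketch below applies that relation; the function name and the camera parameters are hypothetical values chosen for illustration.

```python
def stereo_depth_m(focal_px, baseline_m, disparity_px):
    """Depth of a point from its disparity in a rectified stereo pair."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline.
for disparity in (4.2, 8.4, 16.8):
    depth = stereo_depth_m(700.0, 0.12, disparity)
    print(f"disparity {disparity:5.1f} px -> depth {depth:5.2f} m")
```

Because depth is inversely proportional to disparity, distant objects produce small disparities and their depth estimates are more sensitive to matching errors.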

Sensor Fusion and Multi-Sensor Data Integration

The integration and fusion of data from these diverse sensors are critical for the enhanced perception capabilities of autonomous vehicles. Sensor fusion involves combining the strengths of each sensor type to compensate for their individual limitations, thereby creating a comprehensive and reliable representation of the vehicle's environment[19][20]. This integration is essential for ensuring the safety, reliability, and efficiency of autonomous driving systems.

Techniques and Algorithms for Sensor Fusion

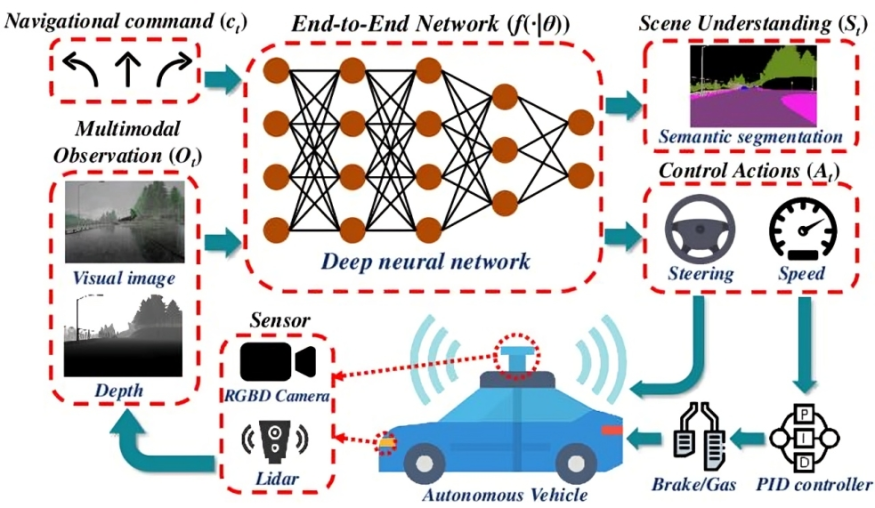

Sensor fusion and multi-sensor data integration are pivotal for enhancing perception in autonomous vehicles, facilitating accurate environmental understanding crucial for navigation and safety. Various techniques and algorithms have been developed and refined over the years to optimize the process of combining data from disparate sensor sources such as RADAR, LiDAR, cameras, and ultrasonic sensors. This section delves into some of the primary methods and computational models employed in the sensor fusion domain, highlighting their application in autonomous vehicle systems.

Kalman Filters

Kalman filters are a cornerstone of sensor fusion and are used extensively to estimate the state of a dynamic system. They are particularly adept at filtering noise out of sensor data, which improves the accuracy of position and velocity estimates in real time[21]. A Kalman filter predicts the system's next state from its current state, then corrects that prediction with each new measurement, weighting the two according to their respective uncertainties. This predict-and-correct cycle is invaluable for autonomous vehicles, enabling them to navigate and react to their environment with a higher degree of precision.
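As a minimal sketch of the idea, the following scalar Kalman filter tracks a single slowly varying quantity, such as the range to a stationary obstacle. It is deliberately simplified: production filters usually track a multi-dimensional state (position and velocity, for instance) with matrix equations. The noise variances `q` and `r` and the readings are assumed values, not taken from any cited system.

```python
def kalman_step(x, p, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p : prior state estimate and its variance
    z    : new measurement
    q, r : process and measurement noise variances (assumed known)
    """
    # Predict: the state is modelled as constant, so only uncertainty grows.
    p_pred = p + q
    # Update: blend prediction and measurement using the Kalman gain.
    gain = p_pred / (p_pred + r)
    x_new = x + gain * (z - x)
    p_new = (1.0 - gain) * p_pred
    return x_new, p_new


# Hypothetical noisy range readings (metres) around a true value near 10 m.
readings = [10.3, 9.8, 10.1, 10.4, 9.9]
x, p = 0.0, 1000.0  # vague initial guess with very large uncertainty
for z in readings:
    x, p = kalman_step(x, p, z, q=0.01, r=0.25)
print(f"estimate = {x:.2f} m, variance = {p:.3f}")
```

The gain is close to 1 while the estimate is uncertain, so early measurements dominate; as the variance shrinks, the filter trusts its own prediction more and smooths out measurement noise.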

Deep Learning Algorithms

In recent years, deep learning algorithms have emerged as a powerful tool for sensor fusion, enabling the integration of multi-sensor data to achieve more complex perception tasks[22][23]. Techniques such as Convolutional Neural Networks (CNNs) are fundamental in processing and analyzing images for tasks like object recognition, lane detection, and reading road signs[24]. Advanced deep learning architectures, including Faster R-CNN, YOLO (You Only Look Once), SSD (Single Shot Multibox Detector), and DSSD (Deconvolutional Single Shot Multibox Detector), are utilized for object detection and scene perception[25][26]. These models are capable of learning from large datasets, improving their ability to interpret sensor data synergistically, thereby enhancing the autonomous vehicle's understanding of its surroundings.

Fault Detection and Isolation Techniques

Ensuring the reliability of sensor data is critical for the safe operation of autonomous vehicles. Techniques for fault detection, isolation, identification, and prediction are essential components of a robust sensor fusion framework[27]. By employing machine learning and deep neural network architectures, these techniques can identify and mitigate the impact of faulty sensor data, ensuring that decision-making processes are based on accurate and reliable information. This layer of data validation is crucial for maintaining the integrity of the sensor fusion process, safeguarding against the potential consequences of acting on erroneous data.
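The approaches cited above rely on machine learning models. As a much simpler stand-in that conveys the same goal, the sketch below flags any sensor whose reading disagrees with the median of its redundant peers before the data is fused. The sensor names, readings, and tolerance are hypothetical, and this consistency check is not the method of the cited work.

```python
from statistics import median


def flag_faulty(readings, tolerance):
    """Return sorted names of sensors that deviate from the median reading.

    readings  : dict mapping sensor name -> measured value
    tolerance : largest acceptable absolute deviation from the median
    """
    centre = median(readings.values())
    return sorted(
        name for name, value in readings.items()
        if abs(value - centre) > tolerance
    )


# Hypothetical range estimates (metres) to the same object.
ranges_m = {"lidar": 15.2, "radar": 15.0, "stereo_camera": 22.7}
faulty = flag_faulty(ranges_m, tolerance=1.0)
print(faulty)  # ['stereo_camera']
```

A median-based check tolerates a minority of faulty sensors, but it needs at least three redundant readings to single out the one that is wrong.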

Fusion of LiDAR and Optical Cameras

Combining LiDAR (Light Detection and Ranging) with optical cameras enhances the vehicle's perception capabilities, particularly in terms of depth perception and object recognition[14][28]. LiDAR provides precise distance measurements and 3D mapping capabilities, while cameras offer rich color and texture information. By fusing these data sources, autonomous vehicles can achieve a more comprehensive understanding of their environment, benefiting from the complementary strengths of each sensor type. This synergy is particularly effective in complex driving scenarios, where the detailed environmental model it provides can significantly improve navigational decisions and safety.
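One common first step in LiDAR-camera fusion is to project LiDAR points into the camera image so that pixels can be annotated with measured depth. The sketch below assumes the points have already been transformed into the camera frame (x right, y down, z forward) and uses a pinhole model; the intrinsics `fx`, `fy`, `cx`, `cy` and the sample points are hypothetical.

```python
def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates.

    Returns None for points at or behind the image plane.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    return fx * x / z + cx, fy * y / z + cy


def attach_depth(points_cam, width, height, fx, fy, cx, cy):
    """Map pixel (u, v) -> nearest LiDAR depth for points inside the image."""
    depth_at = {}
    for point in points_cam:
        uv = project_to_pixel(point, fx, fy, cx, cy)
        if uv is None:
            continue
        u, v = int(round(uv[0])), int(round(uv[1]))
        if 0 <= u < width and 0 <= v < height:
            # Keep the closest return when several points hit one pixel.
            depth_at[(u, v)] = min(point[2], depth_at.get((u, v), float("inf")))
    return depth_at


points = [
    (1.0, 0.0, 10.0),   # lands inside the image
    (0.0, 0.5, 5.0),    # lands inside the image
    (0.0, 0.0, -2.0),   # behind the camera, skipped
    (50.0, 0.0, 1.0),   # far outside the field of view, skipped
]
depth_at = attach_depth(points, width=640, height=480,
                        fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(depth_at)
```

In practice the LiDAR-to-camera transform comes from extrinsic calibration, which is why calibration quality directly affects how well depth and image data line up.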

Applications of Sensor Fusion in Autonomous Vehicles

Sensor fusion, the process of combining data from different sensors to improve the accuracy and reliability of the resulting information, plays a crucial role in the development and operation of autonomous vehicles (AVs). This integration technique enhances the perception capabilities of AVs, allowing for more informed and safer decision-making processes. The applications of sensor fusion in autonomous vehicles are diverse and impact several key areas of their operation.

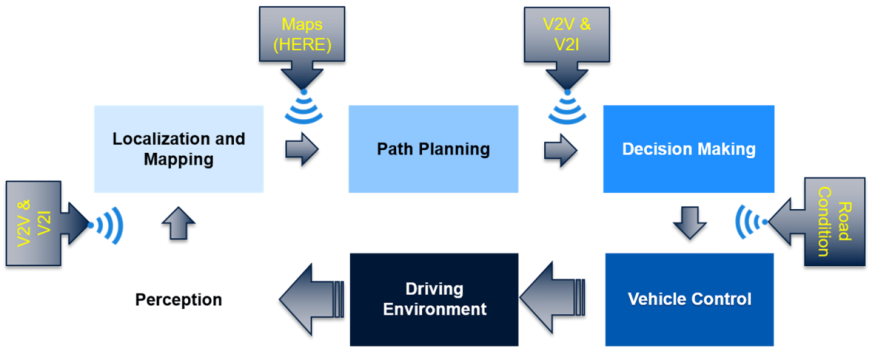

Perception and Environment Modeling

One of the primary applications of sensor fusion in autonomous vehicles is perception and environment modeling. Through the integration of data from multiple sensors, AVs can develop a comprehensive 3D model of their environment. This model includes the detection, identification, classification, and tracking of various objects, such as other vehicles, pedestrians, and obstacles[29]. An accurate and dynamic understanding of the environment is essential for the safe navigation and operation of AVs.

Localization and Mapping

Accurate localization and mapping are fundamental for autonomous vehicles to understand their position and navigate effectively. Sensor fusion techniques merge information from Global Navigation Satellite Systems (GNSS), cameras, LiDAR, and other sensors to achieve highly accurate positioning and mapping capabilities[25][30]. This multi-sensor approach addresses the limitations that individual sensors might face, such as GNSS inaccuracy in urban canyons or degraded LiDAR performance in adverse weather conditions, thereby ensuring reliable navigation.

Obstacle Detection and Avoidance

The ability to detect and avoid obstacles is critical for the safety of autonomous vehicles. Sensor fusion enables the combination of visual data from cameras, distance measurements from LiDAR, and radar signals to identify potential hazards accurately. This comprehensive detection capability allows AVs to initiate appropriate avoidance maneuvers, such as braking or steering adjustments, to prevent collisions[24][31]. The integration of sensor data significantly enhances the vehicle's ability to respond to dynamic changes in its environment.
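To make the link between fused measurements and an avoidance maneuver concrete, the sketch below computes a time-to-collision (TTC) from a fused range and closing speed and maps it to an action. The thresholds (2 s and 4 s) and the action names are illustrative assumptions, not values from any production system.

```python
def time_to_collision_s(range_m, closing_speed_mps):
    """Seconds until contact if the closing speed stays constant.

    Returns infinity when the gap is not shrinking.
    """
    if closing_speed_mps <= 0:
        return float("inf")
    return range_m / closing_speed_mps


def choose_action(range_m, closing_speed_mps, brake_below_s=2.0, warn_below_s=4.0):
    """Pick a response from the fused range and closing speed."""
    ttc = time_to_collision_s(range_m, closing_speed_mps)
    if ttc < brake_below_s:
        return "brake"
    if ttc < warn_below_s:
        return "warn"
    return "continue"


print(choose_action(15.0, 10.0))  # TTC 1.5 s -> brake
print(choose_action(30.0, 10.0))  # TTC 3.0 s -> warn
print(choose_action(30.0, -1.0))  # gap is opening -> continue
```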

Decision-Making and Control

The decision-making process in autonomous vehicles heavily relies on sensor fusion to integrate environmental perception with vehicle control systems. By synthesizing data from various sources, AVs can make informed decisions regarding path planning, speed control, and steering[25]. This process involves evaluating the optimal and safest route to the destination while considering static and dynamic obstacles. Subsequently, the control system adjusts the vehicle's acceleration, torque, and steering angle to follow the selected path safely[25].

Enhanced Connectivity and Interaction

Sensor fusion also extends to the connectivity and interaction of autonomous vehicles with each other (V2V) and with infrastructure (V2I). By sharing sensor data among vehicles and infrastructure, AVs can gain a more comprehensive understanding of their environment beyond their immediate sensory field[25]. This connectivity enables more efficient traffic management and conflict resolution at intersections, and it improves overall road safety by allowing vehicles to anticipate and react to potential hazards more effectively[32].

Future Research Directions

The ongoing development in sensor fusion technologies promises to unlock new capabilities and enhance the performance of autonomous vehicles further. Future research is expected to focus on leveraging advanced machine learning and deep learning algorithms for more efficient data integration and interpretation[25]. This includes improving the accuracy of environmental perception, reducing computational loads, and enabling more sophisticated decision-making processes in AVs.

Challenges and Solutions in Sensor Fusion

The integration of data from multiple sensors for enhanced perception in autonomous vehicles, known as sensor fusion, faces several challenges. These challenges stem from the inherent limitations of each sensor type, the complexity of the data integration process, and external factors that may affect sensor performance. However, various solutions have been proposed and implemented to address these challenges, enhancing the safety and efficiency of autonomous vehicles.

Data Complexity and Integration

One of the primary challenges in sensor fusion is managing the complexity of data generated by multiple sensors such as RADAR, LiDAR, cameras, and ultrasonic sensors[2]. Each sensor type provides a unique perspective of the environment, but integrating this data into a coherent model is challenging. This complexity can lead to increased latency and reduced real-time performance, which is critical for autonomous driving applications[33].

Solutions

To overcome the complexity of data integration, advanced algorithms have been developed. These include image processing algorithms trained on massive datasets to recognize objects and features with high accuracy[20]. Moreover, methodologies like ENet, which can perform multiple tasks such as semantic scene segmentation and monocular depth estimation simultaneously, have shown promise in managing data complexity efficiently[10].

Sensor Calibration and Environmental Factors

Sensor calibration is foundational for accurate sensor fusion. However, multi-sensor systems are commonly calibrated only at the factory, and external factors like temperature and vibrations can degrade that calibration over time[19].

Solutions

Ongoing research into online and offline calibration techniques aims to detect and refine calibration parameters automatically. These developments are critical for the precise estimation of objects' presence and position, ensuring reliable obstacle detection for autonomous vehicles[19].

Centralized vs. Decentralized Fusion

The debate between centralized and decentralized data fusion approaches presents another challenge. In centralized fusion, all data is sent to a central location for processing, which can create a bottleneck and increase response times. Decentralized fusion, on the other hand, distributes the processing load across multiple platforms, but coordinating the fusion process can be complex[4].

Solutions

Both centralized and decentralized approaches have their advantages, and the choice between them often depends on the specific requirements of the autonomous system. For instance, decentralized systems offer advantages in scalability and fault tolerance, as each sensor or platform can make independent decisions to some degree[4].
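The two architectures can be contrasted with a small sketch. Here a centralized fuser combines every raw measurement at once, while a decentralized arrangement lets each node fuse its own sensors and forwards only the local estimates. The node names and readings are hypothetical, and the equality shown holds only under the assumption that all measurement errors are independent.

```python
def fuse(estimates):
    """Inverse-variance fusion of independent (value, variance) pairs."""
    total = sum(1.0 / var for _, var in estimates)
    value = sum(val / var for val, var in estimates) / total
    return value, 1.0 / total


# Hypothetical range measurements (metres, variance) grouped by processing node.
front_node = [(25.1, 0.04), (24.8, 0.09)]
roof_node = [(25.3, 0.01)]

# Centralized: every raw measurement is sent to one fusion step.
centralized = fuse(front_node + roof_node)

# Decentralized: each node fuses locally, then only the summaries are combined.
decentralized = fuse([fuse(front_node), fuse(roof_node)])

print(centralized)
print(decentralized)
```

With independent errors the two results agree up to floating-point rounding, so the decentralized design trades no accuracy for its lower communication load. When node estimates share correlated errors, naive recombination becomes overconfident, which is part of the coordination complexity noted above.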

Sensor Limitations and Environmental Noise

Another challenge lies in the limitations of each sensor type and the environmental noise that can affect sensor readings. For example, LiDAR sensors can generate millions of data points per second, and high-resolution cameras capture vast amounts of pixel information. Filtering and integrating these data streams while accounting for uncertainties and noise requires sophisticated algorithms[33].

Solutions

Intelligent decision subsystems that incorporate risk analysis of autonomous operations have been developed to address these challenges. These systems use algorithms such as the Support Vector Machine (SVM) to detect and isolate sensor faults, improving the reliability of sensor fusion in autonomous vehicles[27].

Future Directions and Trends

The landscape of autonomous vehicles (AVs) and their operation is continuously evolving, with sensor fusion and multi-sensor data integration at the forefront of enhancing perception and navigation capabilities. The integration of advanced technologies such as machine learning, big data analysis, and more sophisticated sensor fusion algorithms is paving the way for significant advancements in this field. This section highlights the anticipated future directions and trends in sensor fusion and multi-sensor data integration for autonomous vehicles.

Enhanced Data Processing Algorithms

The development of more sophisticated data processing algorithms is crucial for improving the accuracy and reliability of sensor fusion methods[34]. With the increasing complexity and volume of data generated by the multiple sensors equipped in AVs, advanced algorithms capable of efficiently processing and analyzing this data are necessary. These algorithms will focus on minimizing false positives and negatives, thereby enhancing the decision-making process in AVs[2][34].
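False positives and false negatives are usually summarized as precision and recall. The sketch below computes both from detection counts; the function name and the counts are hypothetical and serve only to show how the two error types pull the metrics in different directions.

```python
def detection_metrics(true_positives, false_positives, false_negatives):
    """Return (precision, recall) for a set of detection counts."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    # Precision falls as false positives (phantom objects) increase.
    precision = true_positives / predicted if predicted else 0.0
    # Recall falls as false negatives (missed objects) increase.
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Hypothetical evaluation: 90 correct detections, 10 phantom, 30 missed.
precision, recall = detection_metrics(90, 10, 30)
print(f"precision = {precision:.2f}, recall = {recall:.2f}")
```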

Advanced Sensor Fusion Techniques

Future trends include the advancement of sensor fusion techniques that leverage the strengths of various sensing technologies, including RADAR, LiDAR, cameras, and ultrasonic sensors[2]. The integration of these technologies allows for a comprehensive understanding of the environment, essential for the safe operation of autonomous vehicles. Advanced sensor fusion techniques will continue to evolve, focusing on optimizing the combination of sensor data to achieve the highest degree of detection certainty and operational safety[21].

Integration with Machine Learning and Big Data

The application of machine learning and big data analytics in sensor fusion systems is a significant trend that is expected to continue growing[8]. These technologies offer the potential to enhance the performance of autonomous vehicles by enabling more accurate and dynamic interpretations of sensor data. Machine learning algorithms can learn from vast amounts of data, improving the system's ability to predict and respond to environmental changes. The integration of big data analysis further supports the continuous improvement of sensor fusion methodologies by analyzing large datasets to identify patterns and insights[8].

Real-Time Dynamic Mapping

Employing multi-sensor data integration for real-time dynamic mapping presents a promising direction for autonomous vehicle navigation[8]. By continuously updating the map with minimal necessary changes based on real-time data from multiple sensors, AVs can navigate with greater precision and confidence. This approach addresses the challenge of real-time driving in unpredictable environments and is a step towards more autonomous and reliable vehicle operations[8].

Safety and Efficiency Evaluation Methods

As the number of unmanned vehicles increases, establishing objective methods to evaluate the system's safety, performance, and efficiency becomes imperative[12]. These evaluation methods will assess the effectiveness of sensor fusion and multi-sensor data integration technologies in enhancing the operational capabilities of autonomous vehicles. Ensuring safety and efficiency in increasingly complex traffic situations is a key focus area for future research and development[12].

References

[1] : Mobility Insider. (2020, March 3). What is sensor fusion? Aptiv. Retrieved from https://www.aptiv.com/en/insights/article/what-is-sensor-fusion

[2] : RGBSI. (n.d.). Sensor fusion in autonomous driving systems: Part 1. RGBSI Blog. Retrieved May 1, 2024, from https://blog.rgbsi.com/sensor-fusion-autonomous-driving-systems-part-1

[4] : Sensor fusion. (n.d.). In Wikipedia. Retrieved May 1, 2024, from https://en.wikipedia.org/wiki/Sensor_fusion

[5] : Rigoulet, X. (2021, December 5). The importance of sensor fusion for autonomous vehicles. Digital Nuage. Retrieved from https://www.digitalnuage.com/the-importance-of-sensor-fusion-for-autonomous-vehicles/

[6] : Ignatious, H. A., Sayed, H.-E.-, & Khan, M. (2022). An overview of sensors in Autonomous Vehicles. Procedia Computer Science, 198, 736–741. https://doi.org/10.1016/j.procs.2021.12.315

[7] : Ahangar MN, Ahmed QZ, Khan FA, Hafeez M. A Survey of Autonomous Vehicles: Enabling Communication Technologies and Challenges. Sensors. 2021; 21(3):706. https://doi.org/10.3390/s21030706

[8] : Seo, H., Lee, K., & Lee, K. (2023). Investigating the Improvement of Autonomous Vehicle Performance through the Integration of Multi-Sensor Dynamic Mapping Techniques. Sensors (Basel, Switzerland), 23(5), 2369. https://doi.org/10.3390/s23052369

[9] : Fayyad J, Jaradat MA, Gruyer D, Najjaran H. Deep Learning Sensor Fusion for Autonomous Vehicle Perception and Localization: A Review. Sensors. 2020; 20(15):4220. https://doi.org/10.3390/s20154220

[10] : Neven, D., De Brabandere, B., Georgoulis, S., Proesmans, M., & Van Gool, L. (2017). Fast scene understanding for autonomous driving. In Deep Learning for Vehicle Perception, workshop at the IEEE Symposium on Intelligent Vehicles. https://doi.org/10.48550/arXiv.1708.02550

[11] : Kumar GA, Lee JH, Hwang J, Park J, Youn SH, Kwon S. LiDAR and Camera Fusion Approach for Object Distance Estimation in Self-Driving Vehicles. Symmetry. 2020; 12(2):324. https://doi.org/10.3390/sym12020324

[12] : Sziroczák D, Rohács D. Automated Conflict Management Framework Development for Autonomous Aerial and Ground Vehicles. Energies. 2021; 14(24):8344. https://doi.org/10.3390/en14248344

[13] : RGBSI. (n.d.). Sensor fusion in autonomous driving systems: Part 2. RGBSI Blog. Retrieved May 2, 2024, from https://blog.rgbsi.com/sensor-fusion-autonomous-driving-systems-part-2

[14] : Khvoynitskaya, S. (2020, February 11). Autonomous vehicle sensors. Itransition Blog. Retrieved from https://www.itransition.com/blog/autonomous-vehicle-sensors

[15] : Dawkins, T. (2019, January 14). Sensors used in autonomous vehicles. Level Five Supplies. Retrieved from https://levelfivesupplies.com/sensors-used-in-autonomous-vehicles/

[16] : Poor, W. (2023, June 28). LiDAR, Tesla, autonomous cars, Elon Musk, Waymo. The Verge. Retrieved from https://www.theverge.com/23776430/lidar-tesla-autonomous-cars-elon-musk-waymo

[17] : Vargas J, Alsweiss S, Toker O, Razdan R, Santos J. An Overview of Autonomous Vehicles Sensors and Their Vulnerability to Weather Conditions. Sensors. 2021; 21(16):5397. https://doi.org/10.3390/s21165397

[18] : Yeong DJ, Velasco-Hernandez G, Barry J, Walsh J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors. 2021; 21(6):2140. https://doi.org/10.3390/s21062140

[19] : Yeong, D. J., Velasco-Hernandez, G., Barry, J., & Walsh, J. (2021). Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors (Basel, Switzerland), 21(6), 2140. https://doi.org/10.3390/s21062140

[20] : Kalolia, N. (2023, September 25). How image processing is helping self-driving cars navigate the road to the future. Medium. Retrieved from https://nakshikalolia.medium.com/how-image-processing-is-helping-self-driving-cars-navigate-the-road-to-the-future-9fa828ed9980

[21] : (2021, May 24). Sensor fusion – LiDARs & RADARs in self-driving cars. Think Autonomous. Retrieved May 1, 2024, from https://www.thinkautonomous.ai/blog/sensor-fusion/

[22] : Boesch, G. (n.d.). Image recognition. Viso.ai. Retrieved from https://viso.ai/computer-vision/image-recognition/

[23] : Gupta, A., Anpalagan, A., Guan, L., & Khwaja, A. S. (2021). Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array, 10, 100057. https://doi.org/10.1016/j.array.2021.100057

[24] : Bansal, M. (2023, November 28). Image processing in autonomous vehicles: Seeing the road ahead. Medium. Retrieved from https://medium.com/@mohanjeetbansal777/image-processing-in-autonomous-vehicles-seeing-the-road-ahead-b400d176f877

[25] : Fayyad, J., Jaradat, M. A., Gruyer, D., & Najjaran, H. (2020). Deep Learning Sensor Fusion for Autonomous Vehicle Perception and Localization: A Review. Sensors (Basel, Switzerland), 20(15), 4220. https://doi.org/10.3390/s20154220

[26] : Lorente, Ò., Riera, I., & Rana, A. (2021, May 11). Scene understanding for autonomous driving. Retrieved from https://doi.org/10.48550/arXiv.2105.04905

[27] : Safavi S, Safavi MA, Hamid H, Fallah S. Multi-Sensor Fault Detection, Identification, Isolation and Health Forecasting for Autonomous Vehicles. Sensors. 2021; 21(7):2547. https://doi.org/10.3390/s21072547

[28] : Alsubaie, N. M., Youssef, A. A., & El-Sheimy, N. (2017). Improving the accuracy of direct geo-referencing of smartphone-based mobile mapping systems using relative orientation and scene geometric constraints. Sensors (Switzerland), 17(10). https://doi.org/10.3390/s17102237

[29] : LeddarTech. (n.d.). Sensor fusion & perception technology fundamentals FAQ. LeddarTech. Retrieved May 1, 2024, from https://leddartech.com/sensor-fusion-perception-technology-fundamentals-faq/

[31] : Udacity. (2021, March 3). How self-driving cars work: Sensor systems. Udacity Blog. Retrieved May 1, 2024, from https://www.udacity.com/blog/2021/03/how-self-driving-cars-work-sensor-systems.html

[32] : Liu, C., Lin, C.-W., Shiraishi, S., & Tomizuka, M. (2018). Distributed conflict resolution for connected autonomous vehicles. In IEEE Transactions on Intelligent Vehicles (Vol. 3, Issue 1). https://www.ri.cmu.edu/publications/distributed-conflict-resolution-for-connected-autonomous-vehicles/

[33] : Parida, B. (2023, May 17). Sensor fusion: The ultimate guide to combining data for enhanced perception and decision-making. Wevolver. Retrieved from https://www.wevolver.com/article/what-is-sensor-fusion-everything-you-need-to-know

[34] : ScienceDirect. (n.d.). Sensor fusion. ScienceDirect. Retrieved from https://www.sciencedirect.com/topics/engineering/sensor-fusion